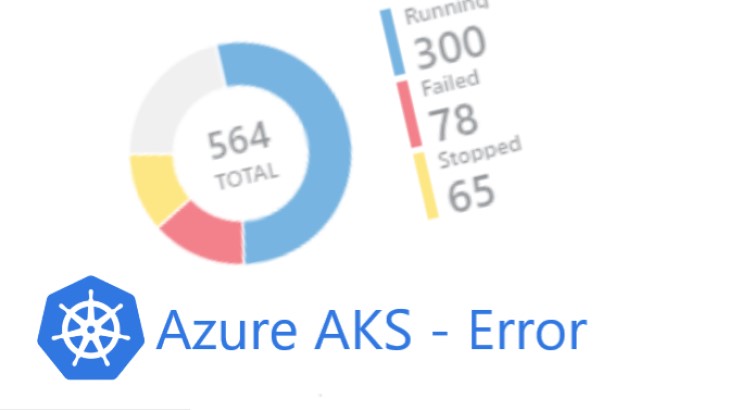

After upgrading an Azure AKS cluster to version 15.7, I ran into an issue: the deployment could no longer re-attach some disks to the cluster nodes. The error message “Attach volume failed, …, already attached to node” appeared in the cluster logs. This article shows a quick fix for this error.

First of all, I want to thank kwikwag for identifying the root cause and summarizing it on GitHub. Because I did not follow those steps exactly, I will summarize my own solution here.

Attaching issue in Kubernetes

After performing an AKS cluster upgrade, some pods were stuck in the status “ContainerCreating”. In the pod events I was able to identify the following error messages:

Warning FailedMount 41m (x54 over 162m) kubelet, aks-agentpool-11111111-vmss000002 Unable to mount volumes for pod "mypod-86cd4f75d9-xxxxx_my-namespace(00000000-73d8-494a-9aa0-0000000000000)": timeout expired waiting for volumes to attach or mount for pod "my-namespace"/"my-pod-86cd7c75d9-xxxxx". list of unmounted volumes=[my-pod]. list of unattached volumes=[my-pod default-token-xxxxx]

Warning FailedAttachVolume 21m (x693 over 161m) attachdetach-controller AttachVolume.Attach failed for volume "pvc-000000000-1652-11ea-a59c-7eb26180ec6b" : compute.DisksClient#Get: Failure responding to request:StatusCode=429 -- Original Error: autorest/azure: error response cannot be parsed: "" error: EOF

Warning FailedMount 2m3s (x17 over 38m) kubelet, aks-agentpool-11111111-vmss000002 Unable to mount volumes for pod "my-pod-86cd7c75d9-xxxxx_my-namespace(0000000000000)": timeout expired waiting for volumes to attach or mount for pod "my-namespace"/"my-pod-86cd7c75d9-xxxxx". list of unmounted volumes=[my-pod]. list of unattached volumes=[my-pod default-token-xxxxx]

Warning FailedAttachVolume 92s (x9774 over 144m) attachdetach-controller AttachVolume.Attach failed for volume "pvc-000000000-1652-11ea-a59c-7eb26180ec6b" : disk(/subscriptions/0000-0000/resourceGroups/MC_RG_XXXXXXX_westeurope/providers/xxx/disks/kubernetes-dynamic-pvc-000000000-1652-11ea-a59c-7eb26180ec6b) already attached to node(/xxx/MC_RG_XXXXXXX_westeurope/xxxx/aks-agentpool-11111111-vmss/x/aks-agentpool-11111111-vmss_1), could not be attached to node(aks-agentpool-11111111-vmss000002)

The really relevant part of this error message is:

Attach failed for volume "pvc-000000000-1652-11ea-a59c-7eb26180ec6b" already attached to node (aks-agentpool-11111111-vmss_1) could not be attached to node(aks-agentpool-11111111-vmss000002)

So what does that mean? The disk is still attached to node #1, which in my scale set is the instance with ID 0 (aks-agentpool-11111111-vmss_1). The attempt to start the pod with this disk on node #2, the instance with ID 1 (aks-agentpool-11111111-vmss000002), therefore fails.
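To pull the disk name and the two node names out of such a long event message without reading it by eye, here is a minimal sketch using sed. It assumes the message has exactly the shape shown above; the variable names are my own and not part of any tool.

```shell
#!/bin/sh
# Anonymized FailedAttachVolume message from this article, stored for parsing.
msg='AttachVolume.Attach failed for volume "pvc-000000000-1652-11ea-a59c-7eb26180ec6b" : disk(/subscriptions/0000-0000/resourceGroups/MC_RG_XXXXXXX_westeurope/providers/xxx/disks/kubernetes-dynamic-pvc-000000000-1652-11ea-a59c-7eb26180ec6b) already attached to node(/xxx/MC_RG_XXXXXXX_westeurope/xxxx/aks-agentpool-11111111-vmss/x/aks-agentpool-11111111-vmss_1), could not be attached to node(aks-agentpool-11111111-vmss000002)'

# Disk name: last path segment inside "disk(...)".
disk_name=$(printf '%s\n' "$msg" |
  sed -n 's|.*disk([^)]*/\([^/)]*\)) already attached.*|\1|p')

# Node that still holds the disk: last path segment of the first "node(...)".
holding_node=$(printf '%s\n' "$msg" |
  sed -n 's|.*already attached to node([^)]*/\([^/)]*\)).*|\1|p')

# Node the pod was scheduled on: content of the second "node(...)".
target_node=$(printf '%s\n' "$msg" |
  sed -n 's|.*could not be attached to node(\([^)]*\)).*|\1|p')

printf 'disk:           %s\n' "$disk_name"
printf 'still attached: %s\n' "$holding_node"
printf 'wanted on:      %s\n' "$target_node"
```

The sed expressions use `|` as the delimiter so that the slashes in the Azure resource paths do not need escaping.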

In general, if you run into issues with Azure AKS, you should have a look at the Microsoft documentation page.

Detach PVC manually from AKS Node

First of all, we identify the PVC disk that is not detaching from node #1.

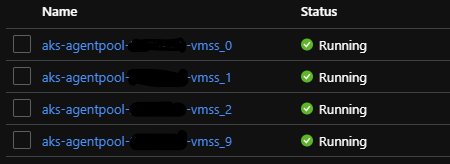

kubernetes-dynamic-pvc-000000000-1652-11ea-a59c-7eb26180ec6b

It might also make sense to have a look at the instances in your Virtual Machine Scale Set in Azure to identify the nodes.
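If you prefer the command line over the portal, the instances can also be listed with the Azure CLI. The following is a minimal sketch: the function name list_vmss_instances is my own, and the resource group and scale set names in the example call are the anonymized placeholders from this article.

```shell
#!/bin/sh
# Hypothetical helper: print instance ID, instance name and computer name
# for every instance of a Virtual Machine Scale Set.
# Usage: list_vmss_instances <resource-group> <vmss-name>
list_vmss_instances() {
  az vmss list-instances \
    --resource-group "$1" \
    --name "$2" \
    --query "[].{instanceId: instanceId, name: name, node: osProfile.computerName}" \
    --output table
}

# Example call (placeholder names from this article):
# list_vmss_instances MC_RG_XXXXXXX_westeurope aks-agentpool-11111111-vmss
```

The computer name column should correspond to the node names that kubectl get nodes reports, which helps to match a Kubernetes node to the instance ID that the az vmss commands expect. It is worth checking this mapping in your own cluster rather than assuming it.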

After that, we take a look at node vmss_1 to identify the LUN to which the PVC disk is attached. You can do this with the following command. "--instance-id 0" selects the respective instance of the Virtual Machine Scale Set.

az vmss show -g MC_RG_XXXXXXX_westeurope -n "aks-agentpool-11111111-vmss" --instance-id 0 --query "storageProfile.dataDisks[].{name: name, lun: lun}"

The output of this command can be fairly long, depending on how many volumes are attached, but you will be able to find the disk you are looking for.

{

"lun": 4,

"name": "kubernetes-dynamic-pvc-000000000-1652-11ea-a59c-7eb26180ec6b"

},

Once you have identified the LUN (in this case LUN 4), you can proceed with detaching the PVC disk from the node manually.

az vmss disk detach -g MC_RG_XXXXXXX_westeurope -n aks-agentpool-11111111-vmss --instance-id 0 --lun 4

The command detaches the PVC disk from the VMSS instance and updates the instance's configuration.
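The lookup and the detach can also be scripted so that you do not have to scan a long disk list by eye. The following is a minimal sketch under a few assumptions: the helper names find_lun and disk_is_detached are my own, and the JMESPath filter simply narrows the same az vmss show query used above to one disk name.

```shell
#!/bin/sh
# Hypothetical helper: print the LUN of a named data disk on one VMSS instance.
# Prints nothing if the disk is not attached to that instance.
# Usage: find_lun <resource-group> <vmss-name> <instance-id> <disk-name>
find_lun() {
  az vmss show \
    --resource-group "$1" \
    --name "$2" \
    --instance-id "$3" \
    --query "storageProfile.dataDisks[?name=='$4'].lun" \
    --output tsv
}

# Hypothetical helper: succeed (0) if the disk is no longer listed on the
# instance, fail (1) if it is still listed, return 2 if az itself failed.
# Usage: disk_is_detached <resource-group> <vmss-name> <instance-id> <disk-name>
disk_is_detached() {
  lun=$(find_lun "$@") || return 2
  [ -z "$lun" ]
}

# Example flow (placeholder names from this article):
# RG=MC_RG_XXXXXXX_westeurope
# VMSS=aks-agentpool-11111111-vmss
# DISK=kubernetes-dynamic-pvc-000000000-1652-11ea-a59c-7eb26180ec6b
# lun=$(find_lun "$RG" "$VMSS" 0 "$DISK")
# az vmss disk detach -g "$RG" -n "$VMSS" --instance-id 0 --lun "$lun"
# disk_is_detached "$RG" "$VMSS" 0 "$DISK" && echo "disk detached"
```

Re-running the lookup after the detach is a cheap way to confirm that the disk has actually disappeared from the instance before you redeploy the pod.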

Redeploy pod after detaching

Finally, redeploy your pod manually by scaling the deployment down to 0 replicas and then back up to 1. This can be done with the following commands.

$ kubectl scale deployment my-pod --replicas=0 -n my-namespace

$ kubectl scale deployment my-pod --replicas=1 -n my-namespace

Now your pod should be healthy and up and running again. More tips & tricks are highly welcome 🙂
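The scale-down and scale-up can be wrapped in a small helper that also waits until the pod is ready again. This is a minimal sketch, assuming a deployment with a single replica as in this article; the function name redeploy and the five-minute timeout are my own choices.

```shell
#!/bin/sh
# Hypothetical helper: restart a single-replica deployment by scaling it to 0
# and back to 1, then block until the rollout is complete or the timeout expires.
# Usage: redeploy <deployment> <namespace>
redeploy() {
  kubectl scale deployment "$1" --replicas=0 -n "$2" &&
    kubectl scale deployment "$1" --replicas=1 -n "$2" &&
    kubectl rollout status "deployment/$1" -n "$2" --timeout=5m
}

# Example call (names from this article):
# redeploy my-pod my-namespace
```

If kubectl rollout status times out, kubectl describe pod on the new pod shows whether the FailedAttachVolume event is still being reported.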

If you work with websites on Kubernetes, you might want to check out my post about securing Azure AKS with Let’s Encrypt certificates.